Reading Ars Technica this morning, I came across an article on doxing everyone (everyone!) with Meta’s (née Facebook) smart glasses. The article is of great import, but I headed over to the linked paper that details the process. The authors, AnhPhu Nguyen and Caine Ardayfio, were kind enough to provide links with instructions for removing your information from the linked databases. I imagine, though, that it becomes a war of attrition as the services scrape your data and add it back.

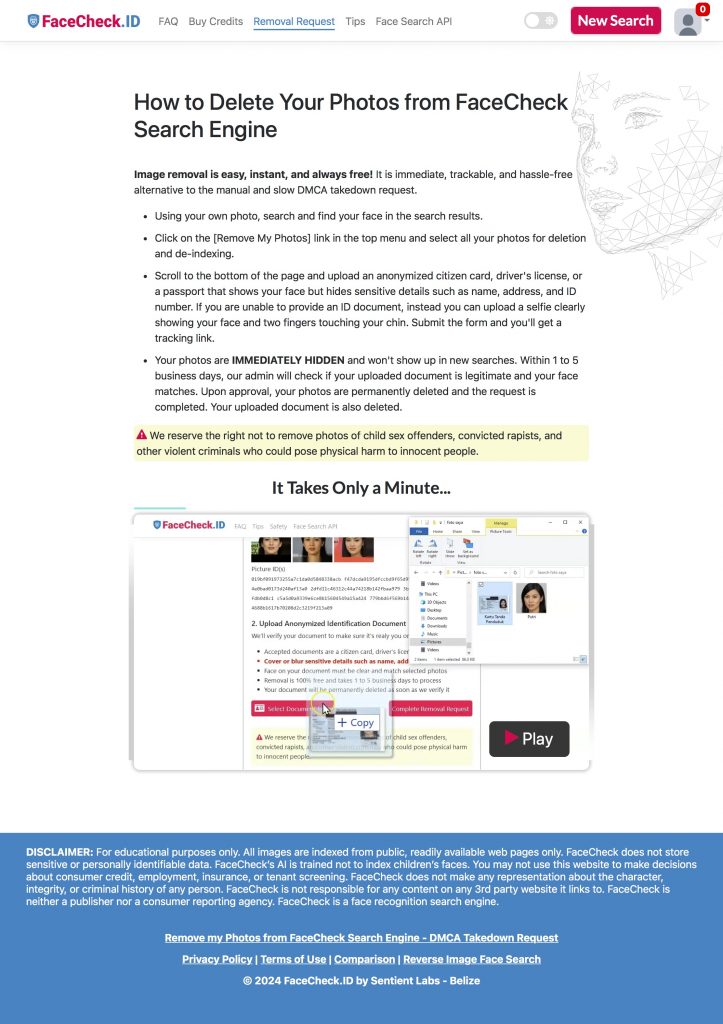

Naturally, I followed these links to get an idea of how one would go about removing their data from these services. I was particularly interested in the language used by one service, FaceCheck.id.

To quote the part that stuck out to me:

We reserve the right not to remove photos of child sex offenders, convicted rapists, and other violent criminals who could pose physical harm to innocent people.

Now this is terribly interesting to me. It makes clear the difference between what they purport to sell, or be, or give, and what they actually are. In fact, the contrast is enhanced if you only read down the page a little:

DISCLAIMER: For educational purposes only. All images are indexed from public, readily available web pages only.

Ah, so it’s for educational purposes, but they reserve the right to make sure that some people remain visible, ostensibly in the interests of ‘public safety’. They, of course, are not the courts. They have no information that allows them to assess who presents a risk to others, and even if they did, a private entity has no right to do so. Is this valuable in actually protecting people? I am not sold on that. If someone poses a danger then by all means, let the court’s sentencing and probation reflect that.

What is the education here? Should we profile based on those who have been caught? What have we learned through this venture? Surely such a high-minded educational site will have peer-reviewed research that is advanced through this educational database.

What they do have, what they sell, are the lurid possibilities. Sell the darkness and sell knowing. How can you know if someone is a child sex offender? How can you know if your nice neighbor once beat a man? What if? What if? What if?

You can know who’s a rapist or a ‘violent criminal’. You know your child will be safe, since you check every person they meet. Safety is for sale. Never mind that this likely isn’t the best way to protect children. Never mind the fact that they served their sentence; they were violent criminals once. Never mind the racial bias of the justice system. Never mind a case of mistaken identity on these services’ part.

They veil these much baser interests, the interest in profiting off of speculation and in sowing distrust and fear, in the cloak of public safety and moral responsibility. Furthermore, the entire public is caught in their dragnet.

I take it as a solid assumption that the “shitty tech adoption curve” is true.

Here is the shitty tech. Who isn’t allowed to opt out now?

Who is next?
